Deep Dive on OpenAI’s MLE-Bench

Earlier this month (2024/10/10), OpenAI dropped MLE-bench, a benchmark to evaluate AI agents on machine learning engineering. Should human MLEs worry they’ll be replaced by AI soon?

TLDR

1. MLE-Bench indicates OpenAI has set the agenda for AIs to acquire MLE skills.

2. But MLE-Bench may struggle to take off because of its low replicability (an SD of about ±4.4 percentage points) and prohibitively high cost (~$48,000 per metric reading).

3. It’s harder for AI to acquire MLE skills than SWE skills because of limited data.

First, What Is OpenAI’s MLE-Bench?

The OpenAI team collected 75 machine learning competitions from Kaggle and defined human baselines using the leaderboard of each competition. Their best-performing setup (OpenAI’s o1-preview model with AIDE scaffolding) earns at least a bronze medal in 16.9% of competitions at pass@1. (paper)

The Significance of a Benchmark

Some people may say human MLEs’ jobs are safe as long as AI agents only earn bronze+ medals in 16.9% of the competitions. However, I want to highlight the significance of a benchmark beyond AI’s current performance.

(1) A benchmark is an agenda.

Benchmarks are costly to curate. When a research group creates a benchmark, it’s because they (1) have identified the problem space as a priority, and (2) plan to invest in the area for the long term. The goal is to develop the skills the benchmark measures.

MLE-Bench, as its name indicates, sets the agenda to train AI agents to do MLE tasks. In other words, for human MLEs, their skillset is on the agenda of OpenAI.

* OpenAI also helps curate a verified version of SWE-Bench. MLE-Bench & SWE-Bench indicate OpenAI’s agenda for software engineering skillsets.

(2) A benchmark is an invitation.

A benchmark invites others to work on the problem. When done right, a benchmark (+ data) can help an entire field flourish. The most famous example is perhaps Fei-Fei Li’s ImageNet.

(3) MLE-Bench establishes the legitimacy of AI agents by (1) providing a human performance baseline and (2) enabling a leaderboard.

With the benchmark, it’s easier to justify decisions to (1) choose AI agents over human MLEs, and (2) choose one AI agent over another.

Imagine you are a decision-maker and hear the following statements:

- “This AI agent achieves gold medal level performance in 80% of the competitions in MLE-Bench!”

- “This AI agent ranks 1st in the MLE-Bench Leaderboard!”

Benchmarks and the accompanying leaderboard help justify decisions and make claims.

MLE-Bench Deep Dive: Why MLE-Bench Might Be Hard to Take Off

(1) Replicability

The most serious problem is replicability. A benchmark needs test-retest reliability, meaning that results should remain relatively stable across different runs of the same model. Low test-retest reliability undermines the credibility of the benchmark. We don’t want a model to claim medals in 50% of MLE-Bench competitions and then earn only 40% on another run.

Test-retest reliability can especially be an issue for multi-step benchmarks like MLE-Bench, where a tiny difference in early steps can make or break outcomes.

For instance, Table 2 of the paper reports standard errors. The Any Medal (%) score of o1-preview (with AIDE) is 16.9 ± 1.1 (SE). If we factor in the 16 seeds and convert the SE into a standard deviation using SD = SE * sqrt(N_seed), the Any Medal (%) score becomes 16.9 ± 4.4 (SD).
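The conversion is a one-liner. Here is a minimal sketch, assuming the 16 seeds are independent runs and that the reported SE is the standard error of their mean; the function name `sd_from_se` is only illustrative.

```python
import math


def sd_from_se(se: float, n_runs: int) -> float:
    """Convert the standard error of a mean into the per-run standard deviation."""
    return se * math.sqrt(n_runs)


# Figures quoted from the paper's Table 2: SE = 1.1 over 16 seeds.
sd = sd_from_se(1.1, 16)
print(f"SD = {sd:.1f} percentage points")
```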

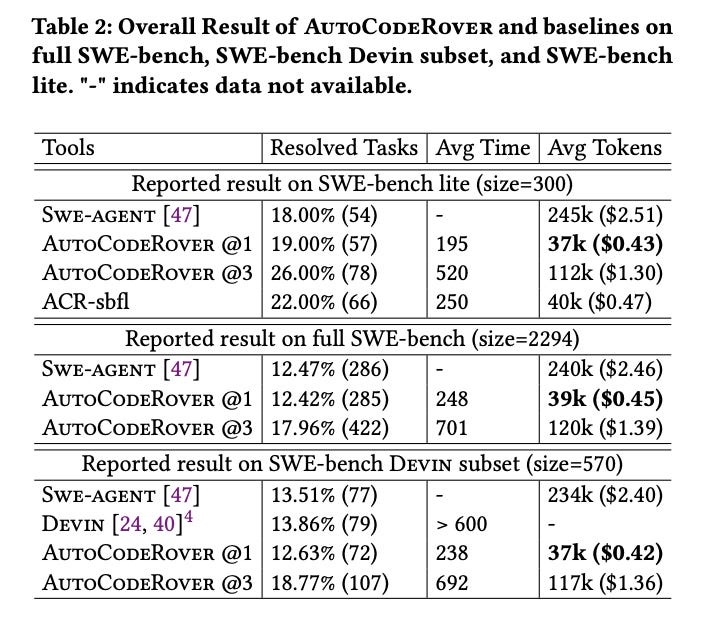

With 16 random runs at a mean of 16.9% and an SD of 4.4, individual results can range from 11.8% to 21.7%, a spread of about 10 percentage points. On another leaderboard (e.g. SWE-Bench Lite on 10/25/2024), that’s the difference between #1 and #9!

In contrast, SWE-Bench has a much smaller variance. The authors of SWE-Bench note: “average performance variance is relatively low, though per-instance resolution can change considerably.” The Standard Deviation of SWE-Bench is only 0.49 (as compared to MLE-Bench’s 4.4).

That gives us some numbers to estimate task complexity. SWE-bench Lite has about 4x as many items as MLE-Bench (300 vs. 75), and more items reduce the SD of the aggregate score by a factor of sqrt(N_items), which is roughly 2 in this comparison. We can therefore estimate the ratio of single-item variance between MLE-Bench and SWE-Bench as (4.4/0.49/2)² ≈ 20. In other words, MLE-Bench’s tasks are about 20 times noisier (in terms of per-item variance) than SWE-Bench’s tasks, which I read as a rough proxy for task complexity.
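The same estimate can be written out in a few lines. This is a back-of-the-envelope sketch, assuming items within each benchmark are independent and equally noisy, and using 75 competitions for MLE-Bench (the count given in the paper summary above); `per_item_variance_ratio` is an illustrative helper, not something from either paper.

```python
import math


def per_item_variance_ratio(sd_a: float, n_items_a: int,
                            sd_b: float, n_items_b: int) -> float:
    """Ratio of per-item variance between benchmark A and benchmark B.

    The SD of a score averaged over n independent items shrinks as
    per_item_sd / sqrt(n), so we multiply back by sqrt(n) to undo that.
    """
    per_item_sd_a = sd_a * math.sqrt(n_items_a)
    per_item_sd_b = sd_b * math.sqrt(n_items_b)
    return (per_item_sd_a / per_item_sd_b) ** 2


# MLE-Bench: SD 4.4 over 75 items; SWE-bench Lite: SD 0.49 over 300 items.
ratio = per_item_variance_ratio(4.4, 75, 0.49, 300)
print(f"Per-item variance ratio: about {ratio:.0f}x")
```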

(2) Costly to run (~$48,000 per metric reading)

MLE-Bench is costly to run. According to the paper, one seed of the best setup (o1-preview with AIDE) across all 75 competitions requires (1) 1800 GPU hours of compute, and (2) on average 127.5M input tokens and 15.0M output tokens.

Let’s calculate how much it costs:

GPU (24GB A10) at $0.15/hour (from vast.ai): total GPU cost = 0.15 * 1800 = $270

o1-preview input token: $15/M * 127.5M = $1912.5

o1-preview output token: $60/M * 15M = $900

So a single run on the MLE-bench costs ~$3000.

Moreover, if one wants a reliable reading by averaging multiple runs (e.g. 16 runs, as the paper does), the total cost will be about $48,000 per reading. Even a modest 3 runs will cost ~$9,000 per reading.
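For anyone who wants to plug in their own prices, the arithmetic above can be sketched as follows. The prices are the ones quoted in this post (vast.ai GPU rate and o1-preview token rates at the time of writing) and should be treated as assumptions that will drift; the function names are illustrative.

```python
# Usage figures from the paper, for one seed over all 75 competitions.
GPU_HOURS = 1800
INPUT_TOKENS_M = 127.5   # millions of input tokens
OUTPUT_TOKENS_M = 15.0   # millions of output tokens

# Prices quoted in this post (assumptions, subject to change).
GPU_PRICE_PER_HOUR = 0.15   # USD
INPUT_PRICE_PER_M = 15.0    # USD per million input tokens
OUTPUT_PRICE_PER_M = 60.0   # USD per million output tokens


def cost_per_seed() -> float:
    """Total USD cost of a single run over the whole benchmark."""
    gpu = GPU_HOURS * GPU_PRICE_PER_HOUR
    tokens_in = INPUT_TOKENS_M * INPUT_PRICE_PER_M
    tokens_out = OUTPUT_TOKENS_M * OUTPUT_PRICE_PER_M
    return gpu + tokens_in + tokens_out


def cost_per_reading(n_seeds: int) -> float:
    """Total USD cost of one metric reading averaged over n_seeds runs."""
    return n_seeds * cost_per_seed()


for n in (1, 3, 16):
    print(f"{n:>2} seed(s): ${cost_per_reading(n):,.0f}")
```

The unrounded per-seed total comes out slightly above the ~$3,000 used in the text, so the multi-run figures quoted here are mild underestimates.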

Yes, a benchmark is an invitation. But this invitation is too expensive to afford.

Similarly, SWE-bench is also expensive, with a single run costing ~$5643 ($2.46 * 2294) on the full SWE-bench, and ~$753 ($2.51 * 300) on SWE-bench lite. The lite version was developed to make the test more affordable, indicating cost is a top concern for LLM benchmarks.

To summarize, MLE-Bench might be hard to take off because of its (1) low replicability + (2) hefty cost.

Another Two Issues

Data Contamination (not fully addressed in the paper)

Data contamination happens when a benchmark or test dataset is included in the LLM’s training data (ref). Contamination inflates performance scores, so the model performs worse on new, unseen problems than the benchmark suggests.

For instance, Alex Svetkin found that an LLM (e.g. claude-3-opus) solved 92.86% of Hard Leetcode problems published before its training data cutoff date, but only 2.22% of the Hard problems published after it.

Similarly, the authors of MLAgentBench, which tests LLMs’ ability to solve machine learning problems, noticed that

“the success rates vary considerably; They span from 100% on well-established older datasets to as low as 0% on recent Kaggle challenges created potentially after the underlying LM was trained”.

The MLE-Bench paper doesn’t report performance before vs. after each model’s cutoff date. However, the paper tries to address contamination using (1) task familiarity (measured by token probability), (2) a plagiarism detector (how closely the LLM’s solution copies the top Kaggle solutions), and (3) obfuscation of task descriptions.

Admittedly, data contamination is also a problem for SWE-Bench (and has plagued almost all benchmarks before). Some benchmarks are becoming popular and widely accepted despite data contamination issues. But it’s important to note that MLE-Bench, like other static benchmarks, can be gamed and exaggerate the model’s performance on new, unseen tasks.

The best solution for data contamination I’ve seen so far is LiveCodeBench, which tracks models’ cutoff dates and problems’ publication dates. It avoids contamination by testing models only on problems published after their cutoff date.

Similarly, perhaps the best way to prove an ML agent’s ability is to have the agent participate in live Kaggle competitions. If an ML agent can consistently score medals in new Kaggle competitions, then we can be confident about its MLE capability.
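The idea behind date-based filtering can be sketched in a few lines. This is an illustration of the principle only, not LiveCodeBench’s actual code; the `Problem` class, the problem names, and the cutoff date are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Problem:
    name: str
    published: date


def uncontaminated(problems: list[Problem], model_cutoff: date) -> list[Problem]:
    """Keep only problems published strictly after the model's training cutoff."""
    return [p for p in problems if p.published > model_cutoff]


# Hypothetical problems and cutoff, for illustration only.
problems = [
    Problem("old-challenge", date(2021, 5, 1)),
    Problem("new-challenge", date(2024, 9, 1)),
]
cutoff = date(2023, 10, 1)

fresh = uncontaminated(problems, cutoff)
print([p.name for p in fresh])
```

Scores would then be reported only on `fresh`, so each model is evaluated on a different, model-specific slice of the benchmark.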

Limited Data

The data available for improving MLE skills is limited.

(1) “Organic” Data

We have the entire internet for natural language, and GitHub for code generation. However, for MLE tasks, the “organic” data is extremely limited — there are 360 competitions on Kaggle as of Oct 2024. In contrast, as of 2022, there were over 128 million public repositories on GitHub.

(2) Synthetic Data

For SWE-type tasks, it’s possible to cheaply generate large amounts of synthetic data to improve model performance while not violating the data processing inequality. The critical thing here is to have checkers that filter out incorrect answers. For instance, the Llama 3 405B model used 2.7M synthetic coding examples to improve performance. Their synthetic data generation process is: (1) Use an LLM to generate problem descriptions. (2) Use an LLM to generate solutions. (3) Use an LLM to generate unit tests. (4) Execute the generated code to ensure that it passes the unit tests and has no syntax errors. (5) Ask the LLM to self-reflect and correct its responses.
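The execution check in step (4) is what makes this cheap for code. Below is a toy sketch of such a filter, not the Llama 3 pipeline itself: the candidate solutions and tests are hard-coded strings standing in for LLM output, and `passes_tests` is a hypothetical helper. A real pipeline would run untrusted code in a sandbox rather than call `exec` directly.

```python
def passes_tests(solution_src: str, test_src: str) -> bool:
    """Return True if the solution compiles, runs, and passes its unit tests."""
    namespace: dict = {}
    try:
        exec(solution_src, namespace)  # SyntaxError or runtime errors surface here
        exec(test_src, namespace)      # failed asserts raise AssertionError
    except Exception:
        return False
    return True


# Stand-ins for LLM-generated solutions to "write add(a, b)".
candidates = [
    "def add(a, b):\n    return a + b\n",   # correct
    "def add(a, b):\n    return a - b\n",   # wrong answer
    "def add(a, b)\n    return a + b\n",    # syntax error
]

# Stand-in for LLM-generated unit tests.
tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"

kept = [c for c in candidates if passes_tests(c, tests)]
print(f"kept {len(kept)} of {len(candidates)} candidates")
```

Each check here takes milliseconds. The MLE equivalent would have to train a model on real data before it could decide whether to keep a candidate.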

For MLE-type tasks, it’d be much more difficult to generate quality synthetic data. (1) The execution check requires code that is not only “runnable” but also improves model performance, which means actually running it on the task’s training data and getting a result. (2) The training data for each task needs to be curated. This data needs to be “organic” because MLE tasks predict things in the real world in order to have an impact, rather than predicting synthetic outcomes.

To conclude:

(1) MLE skills are now targeted by OpenAI;

(2) MLE-Bench might be hard to take off because of low replicability and high cost;

(3) it’s harder for AI to get MLE skills than SWE skills.

Just for fun — I also asked claude.ai to generate a Medium blog post based on the paper. And you can check it here.